Time series data is rarely clean. Sensors drift, user behaviour changes, and financial prices jump for reasons a dataset cannot fully explain. In many real systems, what we observe is only a noisy shadow of what is actually happening. Kalman Filters and state-space models address this directly by separating measurements from the hidden state you really want to estimate. This is why these methods are a staple topic in applied forecasting and control, and also why they show up in many advanced modules in a data science course in Pune.

Why a state-space view is useful

Most basic forecasting methods treat a series as a single line of values to extrapolate. A state-space model reframes the problem: it assumes there is an internal state evolving over time, and your measurements are generated from that state with noise. This structure is powerful because it matches reality in many domains:

- A delivery fleet has a true vehicle position and velocity, but GPS observations are noisy.

- A sales pipeline has a true demand level, but weekly revenue signals contain random spikes and reporting delays.

- A manufacturing process has a true temperature and pressure profile, but sensors have calibration errors.

By modelling the hidden state explicitly, you can smooth noise, handle missing values gracefully, and produce uncertainty estimates rather than only point predictions.

The building blocks of a state-space model

A standard linear Gaussian state-space model has two equations:

- State (system) equation: how the hidden state evolves.
  - The state at time t is a linear function of the state at time t-1, plus process noise.
  - Example: “today’s underlying demand = yesterday’s demand + small random change”.

- Observation (measurement) equation: how measurements are produced.
  - The observation at time t is a linear function of the state at time t, plus measurement noise.
  - Example: “reported sales = true demand + reporting noise”.
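In the notation commonly used for the linear Gaussian case, the two equations can be written as follows. The symbols (x, y, F, H, Q, R) are a conventional choice, not something defined elsewhere in this article:

```latex
\begin{aligned}
x_t &= F\,x_{t-1} + w_t, & w_t &\sim \mathcal{N}(0, Q) & &\text{(state equation)}\\
y_t &= H\,x_t + v_t,     & v_t &\sim \mathcal{N}(0, R) & &\text{(observation equation)}
\end{aligned}
```

Here x_t is the hidden state, y_t the measurement, F the transition matrix, H the observation matrix, and Q and R the process and measurement noise covariances. The demand example corresponds to the simplest case: a scalar state with F = H = 1.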

The key idea is that there are two types of noise:

- Process noise (the world changes in unpredictable ways).

- Measurement noise (your observation instrument is imperfect).

Choosing these noise levels is not cosmetic: their relative size controls how quickly the filter adapts to genuine changes versus how aggressively it smooths away noise.
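To make the two noise sources concrete, here is a minimal simulation of the demand example, assuming a random-walk level with Gaussian noise. The function simulate_local_level and its parameters are hypothetical names chosen for illustration, and only the Python standard library is used:

```python
import random


def simulate_local_level(n, process_std, measurement_std, start=100.0, seed=0):
    """Hypothetical helper: simulate a random-walk hidden level observed with noise.

    Returns (states, observations), two lists of length n.
    """
    rng = random.Random(seed)  # local generator so runs are reproducible
    states, observations = [], []
    level = start
    for _ in range(n):
        # State equation: today's level = yesterday's level + process noise.
        level = level + rng.gauss(0.0, process_std)
        # Observation equation: reported value = true level + measurement noise.
        observed = level + rng.gauss(0.0, measurement_std)
        states.append(level)
        observations.append(observed)
    return states, observations


states, obs = simulate_local_level(n=50, process_std=1.0, measurement_std=5.0)
```

Raising measurement_std scatters the observations further around the hidden path, while raising process_std makes the hidden path itself wander more. A filter only ever sees obs; states is what it tries to recover.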

How the Kalman Filter works (recursive estimation)

The Kalman Filter is a recursive algorithm. That means it updates estimates step-by-step as new data arrives, without reprocessing the entire history each time. Each time step has two phases:

1) Predict

- Use the system equation to predict the next hidden state.

- Predict what the next measurement should look like.

- Propagate the uncertainty: the predicted variance usually grows, because process noise adds new unknowns at every step.

2) Update (Correct)

- Compare the predicted measurement with the actual measurement (this difference is the innovation or residual).

- Compute the Kalman Gain, which decides how much to trust the new measurement versus the prior prediction.

- Update the state estimate and reduce uncertainty accordingly.
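The two phases can be sketched for the simplest case, a scalar local level model in which the state carries over unchanged apart from noise (F = H = 1). This is a minimal illustration, not a general implementation: kalman_local_level is a hypothetical helper, q and r are assumed to be known variances, and the large initial variance p0 is one common way to express a vague starting guess.

```python
def kalman_local_level(observations, q, r, x0=0.0, p0=1e6):
    """Hypothetical helper: scalar Kalman filter for a local level model.

    q: process noise variance, r: measurement noise variance.
    x0, p0: initial state mean and variance (large p0 = vague prior).
    Returns lists of filtered means and variances.
    """
    x, p = x0, p0
    means, variances = [], []
    for y in observations:
        # 1) Predict: the level carries over; its variance grows by q.
        x_pred = x
        p_pred = p + q
        # 2) Update: innovation = actual minus predicted measurement.
        innovation = y - x_pred
        s = p_pred + r               # innovation variance
        k = p_pred / s               # Kalman gain, always between 0 and 1
        x = x_pred + k * innovation  # corrected state estimate
        p = (1.0 - k) * p_pred       # reduced uncertainty after the update
        means.append(x)
        variances.append(p)
    return means, variances


means, variances = kalman_local_level([10.2, 9.8, 10.5, 10.1], q=0.01, r=0.25)
```

Each part of the loop maps to one bullet above: the prediction, the innovation, the gain, and the corrected estimate with reduced variance. Only x and p are carried between steps, which is what makes the filter recursive.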

If measurement noise is high, the Kalman Gain is smaller, so the filter trusts the model more than the noisy data. If process noise is high, the filter expects rapid changes and will react more quickly to incoming measurements. This balance is exactly what practitioners learn when they go beyond basic forecasting in a data science course in Pune.
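The effect of the noise settings on the gain can be checked directly. For a scalar local level model (an assumption carried over from the sketches here), the variance recursion does not depend on the data, so the gain it settles to can be computed by iterating predict and update without any observations. steady_state_gain is a hypothetical helper:

```python
def steady_state_gain(q, r, p0=1.0, iterations=500):
    """Hypothetical helper: iterate the local level variance recursion.

    Returns the Kalman gain after the recursion has settled.
    No observations are needed because the variances ignore the data.
    """
    p = p0
    k = 0.0
    for _ in range(iterations):
        p_pred = p + q              # predict: uncertainty grows by q
        k = p_pred / (p_pred + r)   # gain for this step
        p = (1.0 - k) * p_pred      # update: uncertainty shrinks
    return k


for q, r in [(1.0, 1.0), (1.0, 10.0), (10.0, 1.0)]:
    print(f"q={q}, r={r} -> gain {steady_state_gain(q, r):.3f}")
```

With these settings the gain settles at roughly 0.62, 0.27 and 0.92 respectively: a tenfold increase in measurement noise makes the filter lean on its prediction, while a tenfold increase in process noise makes it follow the data closely.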

Where Kalman Filters shine in real work

Kalman Filters are not only for academic time series. They are widely used because they are fast, interpretable, and produce uncertainty-aware estimates.

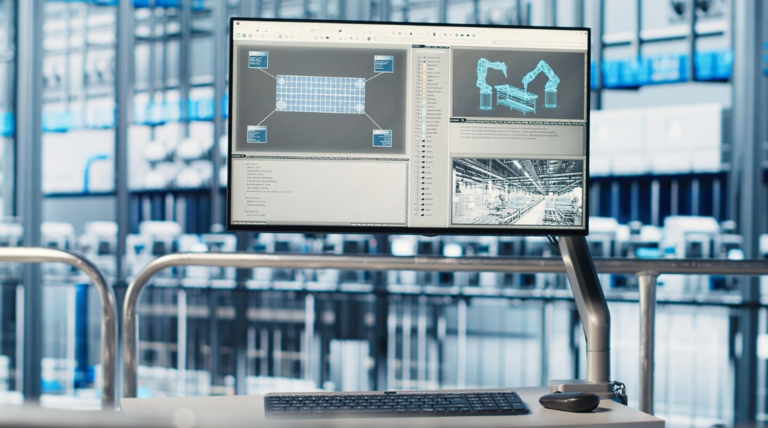

- Sensor smoothing and tracking: robotics, drones, GPS and IoT devices use Kalman Filters to estimate position, velocity, and orientation from noisy readings.

- Finance and econometrics: estimate a latent “trend” component in prices, inflation, or interest rates, separating signal from noise.

- Operations and supply chain: track latent demand and adjust forecasts when the observed sales are distorted by stockouts or promotions.

- Product analytics: estimate an underlying engagement level when daily metrics are noisy due to seasonality, campaigns, or tracking gaps.
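For the tracking use case, the state usually holds more than one quantity. The sketch below assumes a one-dimensional constant-velocity model: the state is [position, velocity], only position is measured, and random accelerations are treated as process noise. The function name, default variances and the prior on velocity are illustrative assumptions, and the 2x2 matrix algebra is written out by hand to keep the example dependency-free:

```python
def track_constant_velocity(positions, dt=1.0, accel_var=0.1, meas_var=4.0):
    """Hypothetical helper: Kalman filter with state [position, velocity].

    Assumes a constant-velocity model with white-noise acceleration and
    noisy position readings only. Expects at least one reading.
    Returns a list of (position, velocity) estimates.
    """
    x = [positions[0], 0.0]               # start at the first reading, zero speed
    P = [[meas_var, 0.0], [0.0, 100.0]]   # vague prior on velocity
    # Process noise covariance for random accelerations over one step.
    Q = [[dt**4 / 4 * accel_var, dt**3 / 2 * accel_var],
         [dt**3 / 2 * accel_var, dt**2 * accel_var]]
    estimates = []
    for z in positions:
        # Predict: x = F x and P = F P F^T + Q, with F = [[1, dt], [0, 1]].
        x = [x[0] + dt * x[1], x[1]]
        p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + Q[0][0]
        p01 = P[0][1] + dt * P[1][1] + Q[0][1]
        p10 = P[1][0] + dt * P[1][1] + Q[1][0]
        p11 = P[1][1] + Q[1][1]
        # Update with H = [1, 0]: only position is observed.
        s = p00 + meas_var           # innovation variance
        k0, k1 = p00 / s, p10 / s    # Kalman gain (one entry per state)
        innovation = z - x[0]
        x = [x[0] + k0 * innovation, x[1] + k1 * innovation]
        # P = (I - K H) P_pred, expanded for the 2x2 case.
        P = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
        estimates.append((x[0], x[1]))
    return estimates


# An object moving at 2 units per step, observed without noise.
estimates = track_constant_velocity([2.0 * t for t in range(50)])
```

Even though velocity is never measured, the filter infers it from successive positions. A real GPS tracker would use two or three spatial dimensions and a linear algebra library, but the predict and update structure stays the same.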

A practical advantage is that state-space models handle missing data naturally. If a measurement is absent at time t, the filter can skip the update step and carry the prediction forward (with its uncertainty growing accordingly) instead of failing or forcing imputation.
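Skipping the update for a missing value is a one-branch change to a scalar filter. In this sketch (again assuming a local level model, with None marking a missing reading and kalman_local_level_with_gaps as a hypothetical name), the prediction is simply carried forward when no measurement arrives:

```python
def kalman_local_level_with_gaps(observations, q, r, x0=0.0, p0=1e6):
    """Hypothetical helper: local level Kalman filter; None = missing reading."""
    x, p = x0, p0
    means, variances = [], []
    for y in observations:
        # The predict step always runs.
        x_pred, p_pred = x, p + q
        if y is None:
            # No measurement: skip the update and keep the prediction.
            x, p = x_pred, p_pred
        else:
            k = p_pred / (p_pred + r)
            x = x_pred + k * (y - x_pred)
            p = (1.0 - k) * p_pred
        means.append(x)
        variances.append(p)
    return means, variances


means, variances = kalman_local_level_with_gaps(
    [10.0, 10.4, None, None, 9.9], q=0.05, r=0.5
)
```

During the two missing steps the estimated level stays flat while its variance grows by q per step, so the filter reports wider uncertainty exactly where data is absent.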

Practical guidance and common pitfalls

- Start simple: begin with a local level model, where the state is a single latent level that follows a random walk, before adding trend, seasonality, or multiple states.

- Tune noise thoughtfully: if your filter overreacts to random spikes, measurement noise may be set too low (or process noise too high). If it is slow to follow genuine shifts, process noise may be too low.

- Validate on behaviour, not only error metrics: check whether the filtered state makes sense to domain owners. A model that minimises RMSE but produces unstable hidden states can be hard to use.

- Know when the basic Kalman Filter is not enough: for non-linear systems or non-Gaussian noise, you may need extensions like the Extended Kalman Filter (EKF), Unscented Kalman Filter (UKF), or particle filters. These still follow the predict–update philosophy but adapt the math.

These points matter because the goal is not just forecasting; it is reliable estimation of the hidden truth behind noisy measurements, which is often the real business requirement discussed in a data science course in Pune.

Conclusion

Kalman Filters and state-space models provide a clean framework for time series problems where observations are noisy and the underlying system is partially hidden. By modelling how the state evolves and how measurements are generated, the Kalman Filter delivers fast, recursive estimates with uncertainty—making it practical for streaming data and operational decision-making. If your time series feels “too messy” for standard methods, a state-space approach is often the next logical step, and a strong skill to build through a data science course in Pune.